Malachy Xinyu Yang

They/Them

Ph.D. Candidate

Carnegie Mellon University

xinyuagi@cmu.edu

Welcome! I'm delighted to have you here on this page. Sending you a virtual but heartfelt greeting! 👋

I am a third-year Ph.D. student in InfiniAI Lab and Catalyst at CMU. I collaborate closely with Prof. Beidi Chen and Prof. Tianqi Chen at CMU, and Prof. Huaxiu Yao at UNC.

Previously, I obtained my bachelor's degree from the ACM Honors Class, Zhiyuan College, Shanghai Jiao Tong University, where I conducted research with Prof. Junchi Yan at ThinkLab. I also had wonderful internship experiences with Prof. Song Han in HAN Lab at MIT, Prof. Chelsea Finn in IRIS Lab at Stanford, and Dr. Luca Zancato at Amazon Web Services.

I am also a passionate community builder: I founded the Foundation Models in the Wild workshop series. Please follow us on Twitter for the latest news, or join our Slack for workshop questions and discussions. I also lead-organize the Reliable and Responsible Foundation Models Workshop and the Advances in Sequence Modeling from Algorithmic Perspectives (ASAP) Seminar Series.

News


Research Highlights

My research lies at the intersection of machine learning systems and foundation models, with a specific focus on developing scalable and generalizable foundation model systems in the wild. Recently, I have been particularly interested in hardware-aware algorithm design with sub-linear complexity.

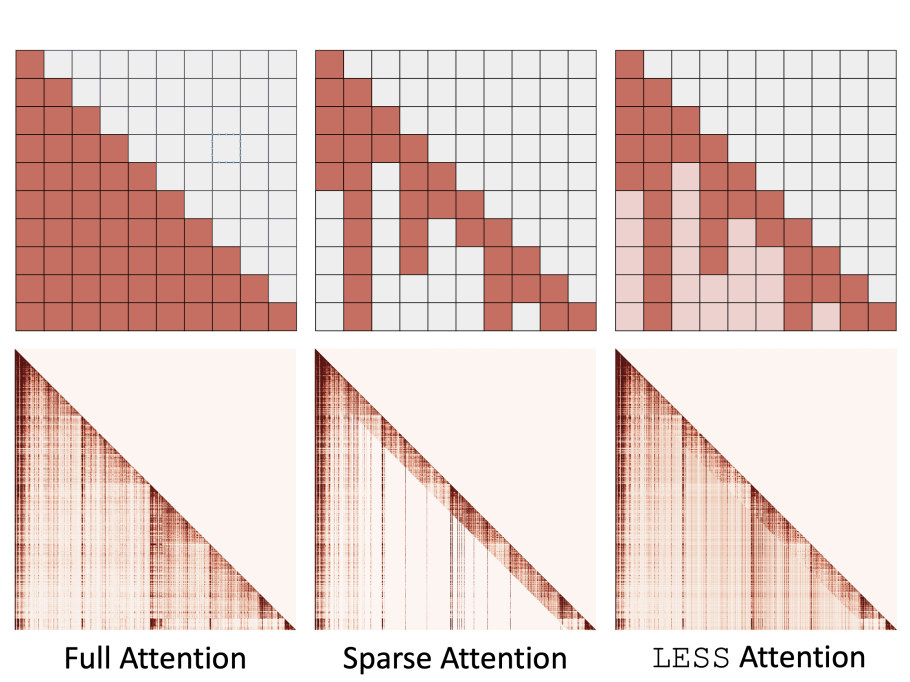

- Infinite-length Retrieval: For each query, how can we retrieve relevant information from an $O(n)$-length contextual cache in foundation models using only $O(\log(n))$ computation and positions?

- Infinite-depth Reasoning: An $O(n)$-depth reasoning problem requires a Transformer with either $O(\log(n))$ layers or $O(n)$ width. Can we achieve equivalent capabilities by repeating layers?

- Infinite-volume Memory: How can we encode $O(n)$-volume knowledge into model parameters? The model size may grow linearly, but the computational cost should remain $O(\log(n))$ through sparse activation.

I am also fascinated by structured contextual cache architectures that go beyond traditional sequential patterns. Such architectures are important for enhancing parallelism in agentic foundation models.

- Uni-directional and Bi-directional Relations: Relations can typically be categorized as uni- or bi-directional. How can we separate them using different attention masks and position embeddings?

- One-to-many and Many-to-one Relations: Current cache architectures only support one-to-many relations. How can we further enable many-to-one relations to support information aggregation?

- Static and Dynamic Relations: Current caches establish static relations, but some operations involve dynamic relations, since they may neither depend on prior information nor affect subsequent information.

If you would like to chat more about these topics, please feel free to email me to schedule a meeting.

Publications

Multiverse: Your Language Models Secretly Decide How to Parallelize and Merge Generation

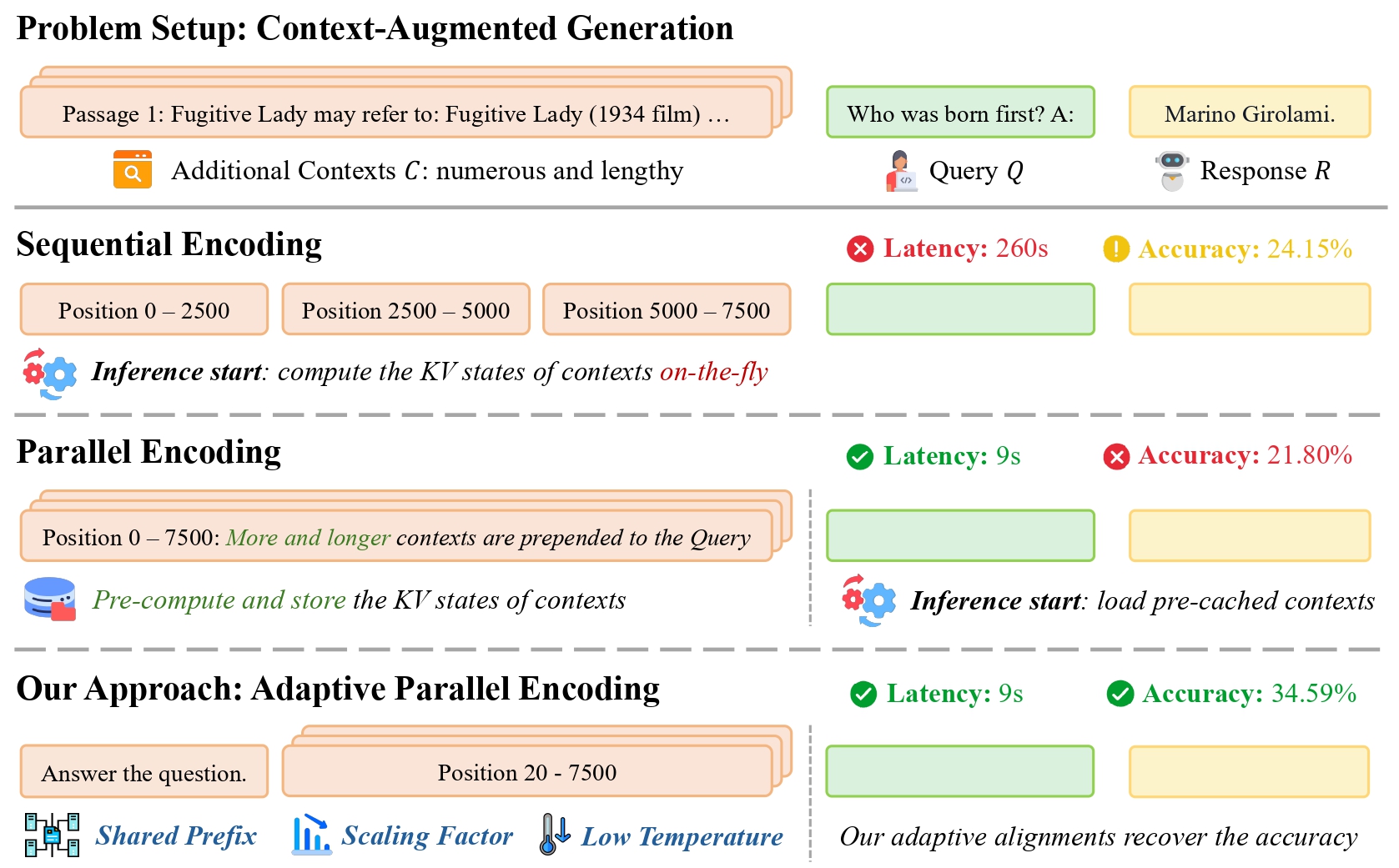

APE: Faster and Longer Context‑Augmented Generation via Adaptive Parallel Encoding

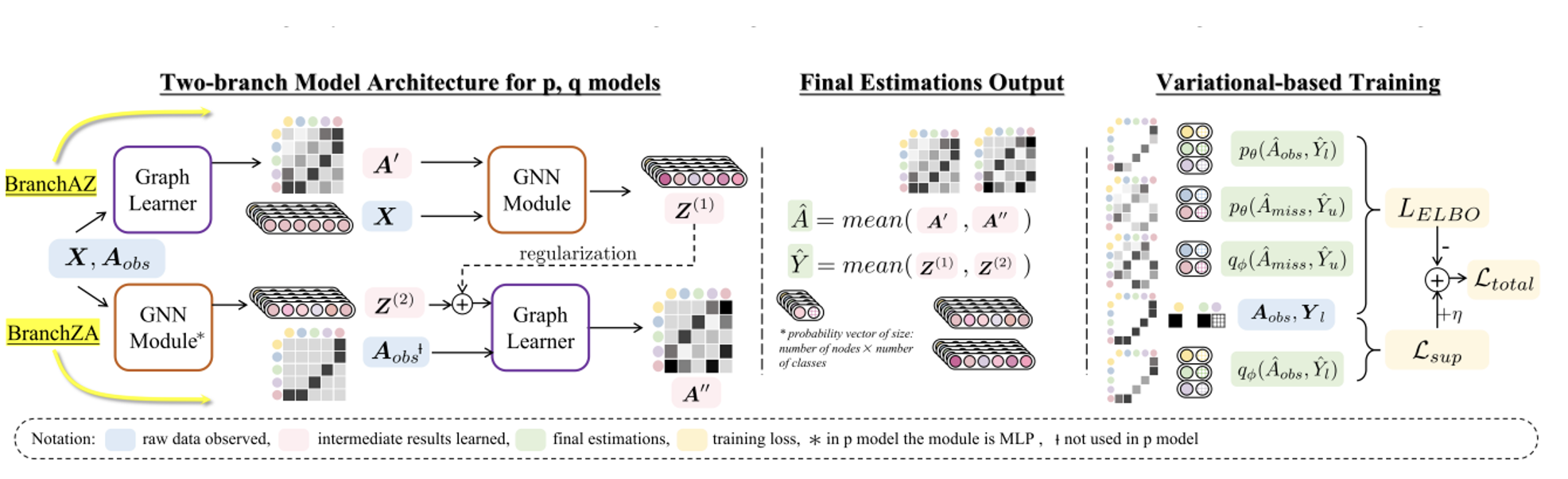

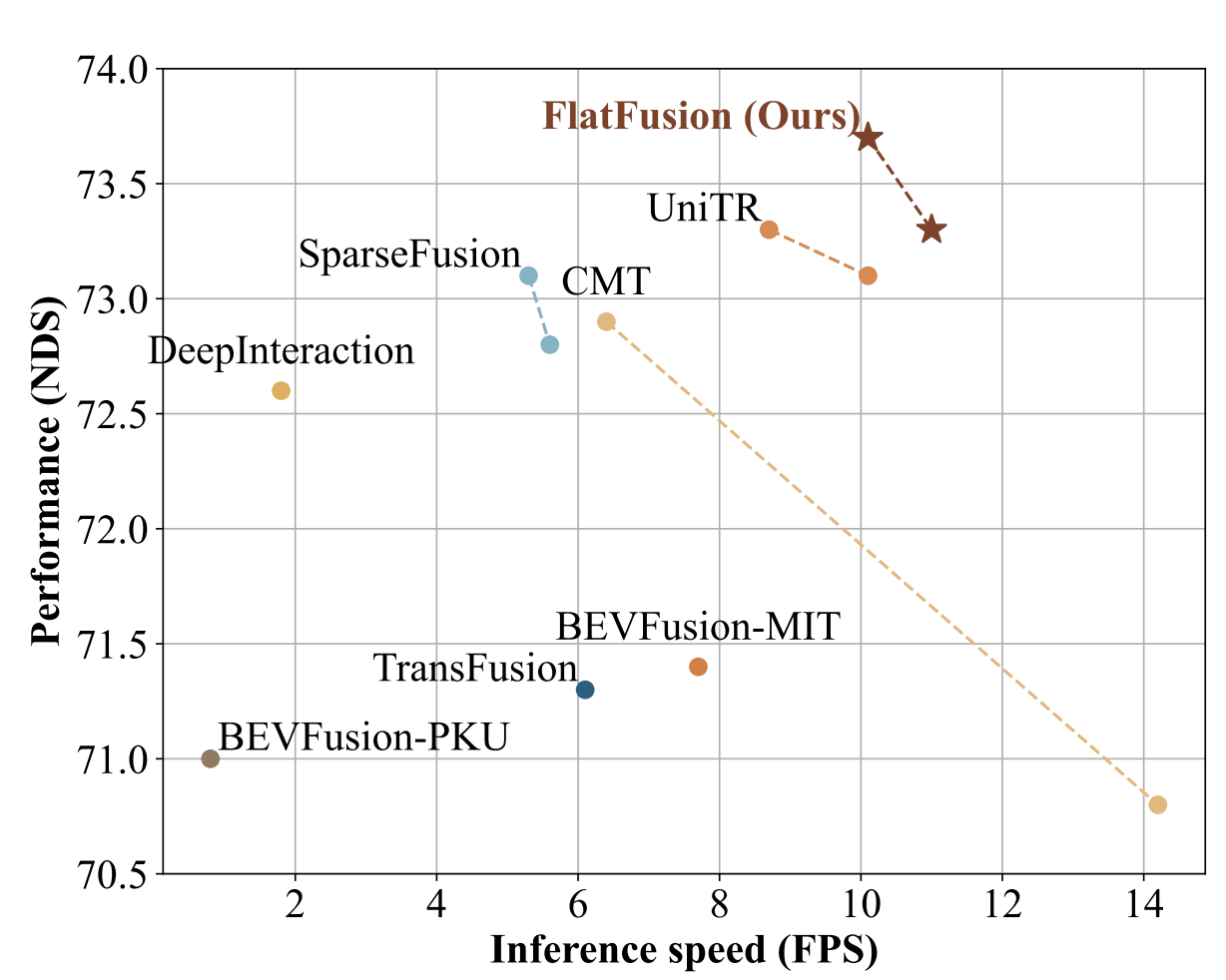

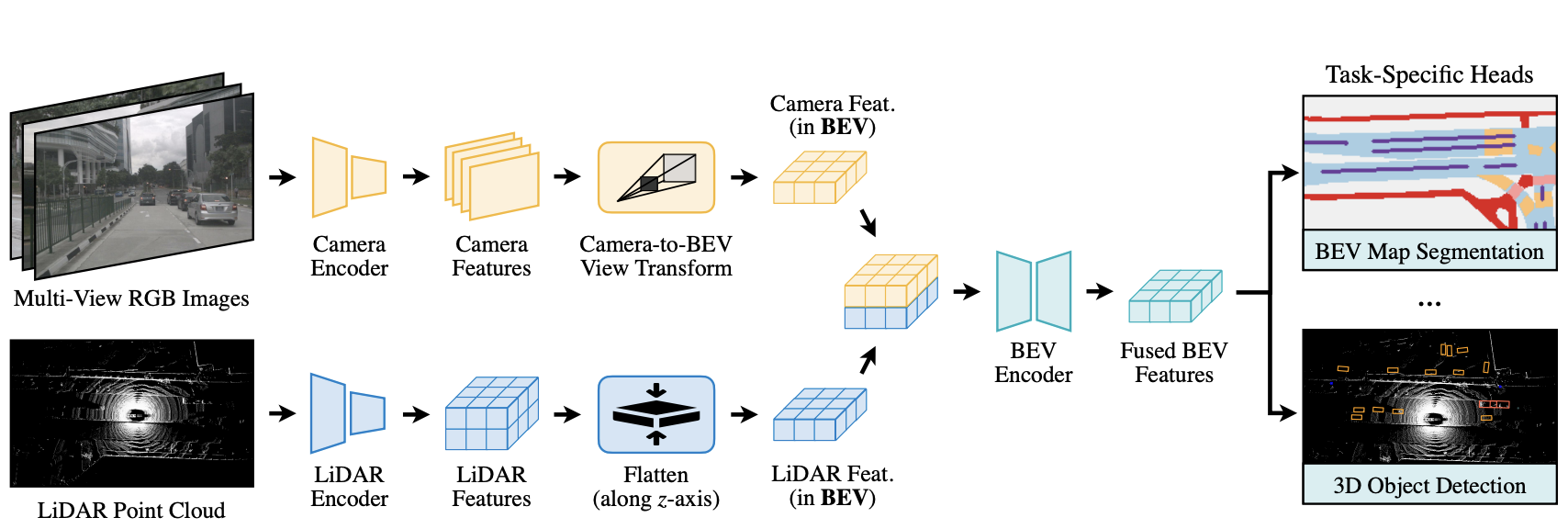

FlatFusion: Delving into Details of Sparse Transformer-based Camera-LiDAR Fusion for Autonomous Driving

LLM.265: Video Codecs are Secretly Tensor Codecs

It Takes Two: On the Seamlessness between Reward and Policy Model in RLHF

Zeroth-Order Fine-Tuning of LLMs with Extreme Sparsity

S2FT: Efficient, Scalable and Generalizable LLM Fine-tuning by Structured Sparsity

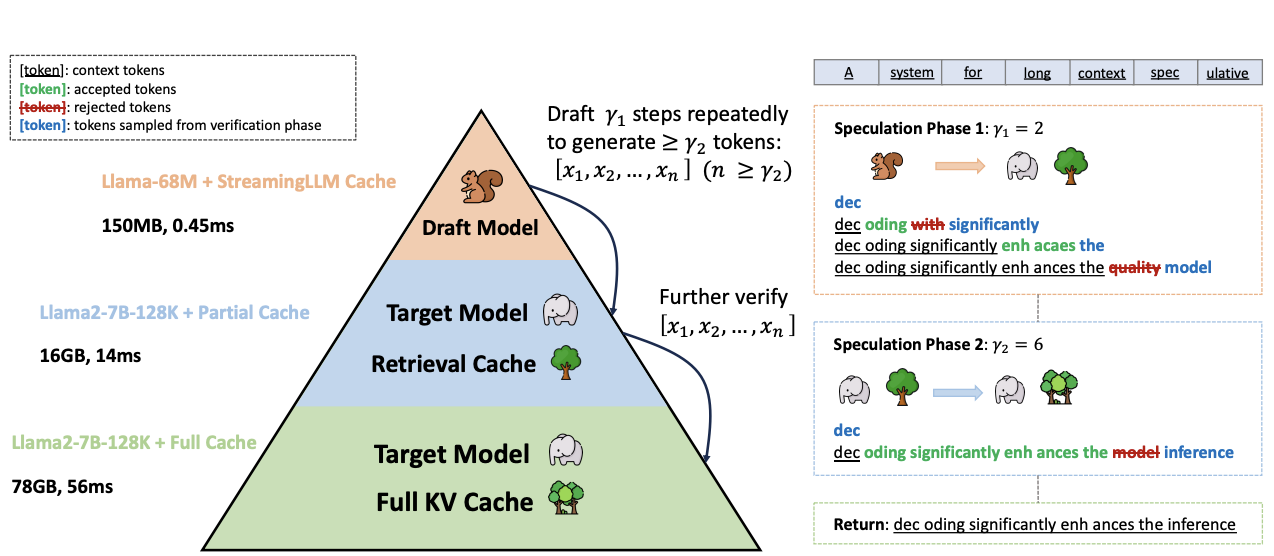

TriForce: Lossless Acceleration of Long Sequence Generation with Hierarchical Speculative Decoding

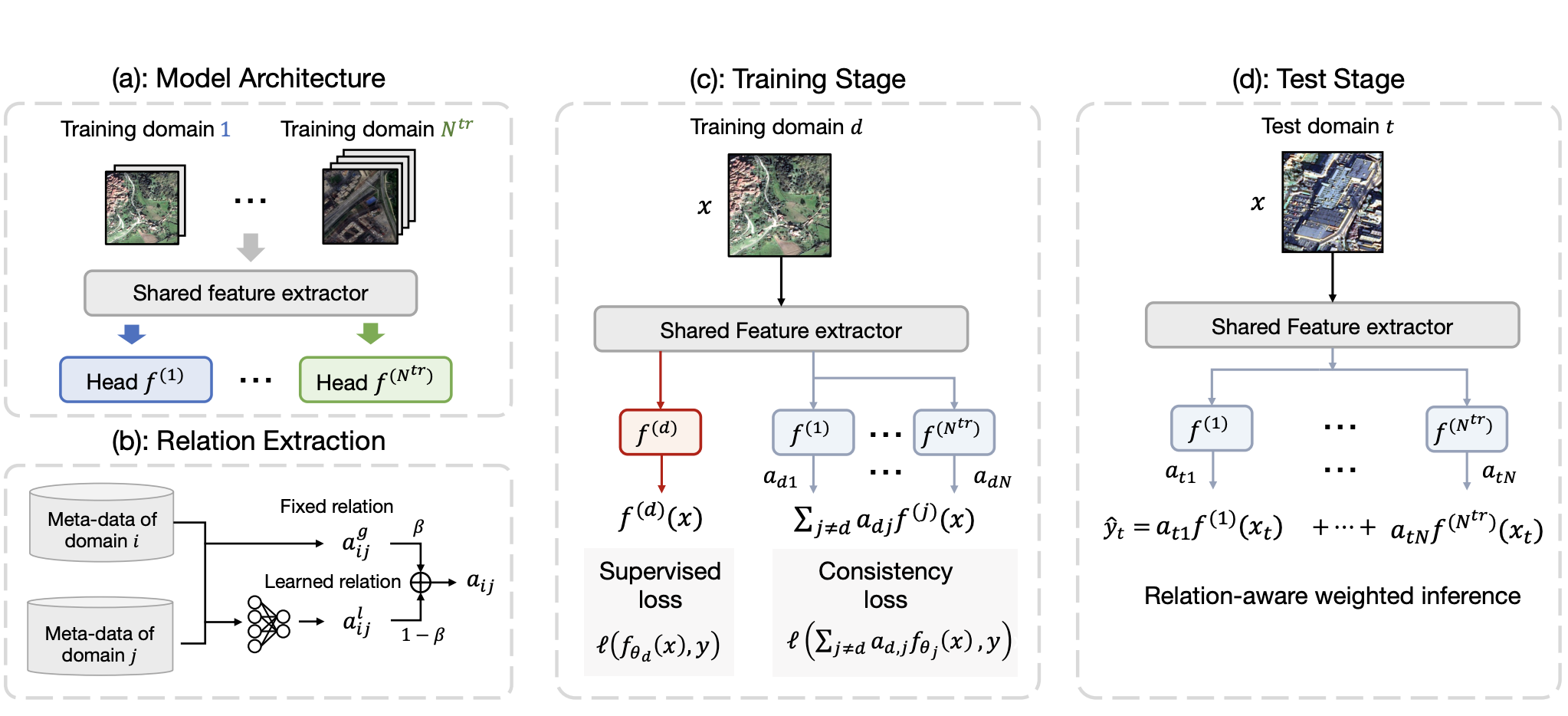

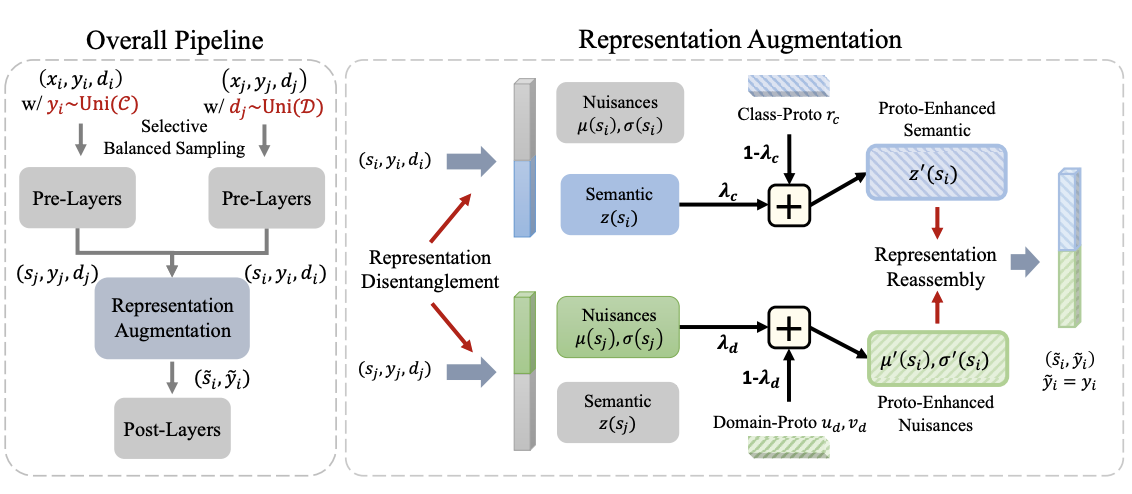

Improving Domain Generalization with Domain Relations

BEVFusion: Multi-Task Multi-Sensor Fusion with Unified Bird's-Eye View Representation

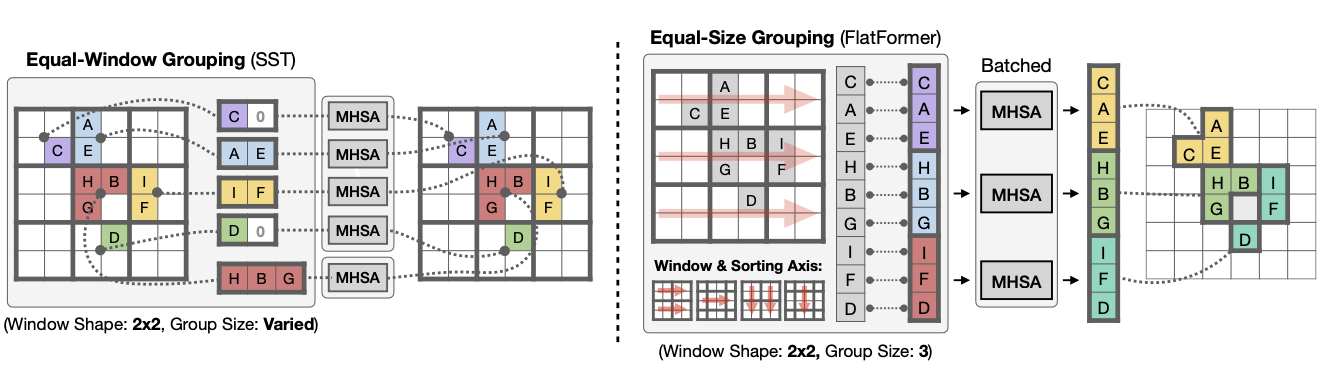

FlatFormer: Flattened Window Attention for Efficient Point Cloud Transformer

Experience

Education

Aug 2023 - Present

Research

Mar 2022 - Oct 2023

Nov 2021 - Jun 2023

Industry

May 2024 - Aug 2024

Service

Seminar Organization

- Lead Organizer, Advances in Sequence Modeling from Algorithmic Perspectives (ASAP)

Workshop Organization

- Lead Organizer and Program Chair, The 1st Workshop on Efficient Reasoning, NeurIPS 2025

- Organizer, MMRAgI: Multi-Modal Reasoning for Agentic Intelligence, ICCV 2025

- Lead Organizer and Program Chair, The 2nd Workshop on Reliable and Responsible Foundation Models, ICML 2025

- Lead Organizer and Program Chair, The 2nd Workshop on Foundation Models in the Wild, ICLR 2025

- Lead Organizer and Program Chair, Workshop on Foundation Models in the Wild, ICML 2024

- Organizer, The 3rd Workshop for Out-of-Distribution Generalization in Computer Vision Foundation Models, ECCV 2024

Conference Review

- International Conference on Machine Learning (ICML), 2024-2025

- International Conference on Learning Representations (ICLR), 2025

- Conference on Neural Information Processing Systems (NeurIPS), 2024-2025

- Conference on Language Modeling (COLM), 2024

- Annual Meeting of the Association for Computational Linguistics (ACL), 2025

- Empirical Methods in Natural Language Processing (EMNLP), 2024

- IEEE International Conference on Robotics & Automation (ICRA), 2025

Journal Review

- Journal of Advanced Transportation (JAT)

- IEEE Transactions on Parallel and Distributed Systems (TPDS)

- IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)

- Transactions on Machine Learning Research (TMLR)

- IEEE Robotics and Automation Letters (RA-L)

Contact Me

Misc

- Before moving to the US, I studied and lived in Shanghai, China for the first two decades of my life.

- Aside from research, I am passionate about traveling, dining, gaming, shopping, anime and manga.